OpenCV object detection: ORB_GPU detector and SURF_GPU descriptor extractor

I was just running a small experiment to play around with different detector/descriptor combinations.

My code uses the ORB_GPU detector to detect features and the SURF_GPU descriptor to compute the descriptors. I use BruteForceMatcher_GPU to match the descriptors and its knnMatch method to get the matches. The problem is that I get a lot of unwanted matches: the code literally matches every feature it can find in both images. I am quite confused by this behaviour. My code (GPU version) is below:

#include "stdafx.h"
#include <stdio.h>

#include <iostream>

#include "opencv2/core/core.hpp"
#include "opencv2/nonfree/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/calib3d/calib3d.hpp"
#include "opencv2/gpu/gpu.hpp"
#include "opencv2/nonfree/gpu.hpp"

using namespace cv;

using namespace cv::gpu;

int main()

{

Mat object = imread( "140614-194209.jpg", CV_LOAD_IMAGE_GRAYSCALE );

if( !object.data )

{

std::cout<< "Error reading object " << std::endl;

return -1;

}

GpuMat object_gpu;

GpuMat object_gpukp;

GpuMat object_gpudsc;

vector<float> desc_object_cpu;

std::vector<KeyPoint> kp_object;

int minHessian = 400;

object_gpu.upload(object);

if( !object_gpu.data)

{

std::cout<< "Error reading object " << std::endl;

return -1;

}

GpuMat mask(object_gpu.size(), CV_8U, 0xFF);

mask.setTo(0xFF);

ORB_GPU detector = ORB_GPU(minHessian);

detector.blurForDescriptor = true;

SURF_GPU extractor;

detector(object_gpu,GpuMat(),object_gpukp);

extractor(object_gpu,GpuMat(),object_gpukp,object_gpudsc,true);

BruteForceMatcher_GPU<L2 <float>> matcher;

detector.downloadKeyPoints(object_gpukp,kp_object);

extractor.downloadDescriptors(object_gpudsc,desc_object_cpu);

Mat descriptors_test_CPU_Mat(desc_object_cpu);

VideoCapture cap(0);

namedWindow("Good Matches");

std::vector<Point2f> obj_corners(4);

//Get the corners from the object

obj_corners[0] = cvPoint(0,0);

obj_corners[1] = cvPoint( object.cols, 0 );

obj_corners[2] = cvPoint( object.cols, object.rows );

obj_corners[3] = cvPoint( 0, object.rows );

unsigned long AAtime=0, BBtime=0;

unsigned long Time[110];

char key = 'a';

int framecount = 0;

int count = 0;

while (key != 27)

{

Mat frame;

Mat img_matches;

std::vector<KeyPoint> kp_image;

std::vector<vector<DMatch > > matches;

std::vector<DMatch > good_matches;

std::vector<Point2f> obj;

std::vector<Point2f> scene;

std::vector<Point2f> scene_corners(4);

vector<float> desc_image_cpu;

Mat H;

Mat image;

GpuMat image_gpu;

GpuMat image_gpukp;

GpuMat image_gpudsc;

cap >> frame;

if (framecount < 5)

{

framecount++;

continue;

}

if(count == 0)

{

AAtime = getTickCount();

}

cvtColor(frame, image, CV_RGB2GRAY);
image_gpu.upload(image);

detector(image_gpu,GpuMat(),image_gpukp);

extractor(image_gpu,GpuMat(),image_gpukp,image_gpudsc,true);

matcher.knnMatch(object_gpudsc,image_gpudsc,matches,2);

detector.downloadKeyPoints(image_gpukp,kp_image);

extractor.downloadDescriptors(image_gpudsc,desc_image_cpu);

Mat des_image(desc_image_cpu);

for(int i = 0; i < min(des_image.rows-1,(int) matches.size()); i++) //the size check below comes first so matches[i][1] is never read out of range

{

if((int) matches[i].size() == 2 && (matches[i][0].distance < 0.6*(matches[i][1].distance)))

{

good_matches.push_back(matches[i][0]);

}

}

//Draw only "good" matches

drawMatches( object, kp_object, image, kp_image, good_matches, img_matches, Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

if (good_matches.size() >= 14)

{

for( int i = 0; i < good_matches.size(); i++ )

{

//Get the keypoints from the good matches

obj.push_back( kp_object[ good_matches[i].queryIdx ].pt );

scene.push_back( kp_image[ good_matches[i].trainIdx ].pt );

}

H = findHomography( obj, scene, CV_RANSAC );

perspectiveTransform( obj_corners, scene_corners, H);

//Draw lines between the corners (the mapped object in the scene image )

line( img_matches, scene_corners[0] + Point2f( object.cols, 0), scene_corners[1] + Point2f( object.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( object.cols, 0), scene_corners[2] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( object.cols, 0), scene_corners[3] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( object.cols, 0), scene_corners[0] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

}

//Show detected matches

imshow( "Good Matches", img_matches );

matcher.clear();

detector.release();

BBtime = getTickCount();

count++;

if(count == 10000)

{

BBtime = getTickCount();

printf("Processing time = %.2lf(sec) \n", (BBtime - AAtime)/getTickFrequency() );

break;

}

extractor.releaseMemory();

detector.release();

key = waitKey(1);

}

return 0;

}
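For reference, the ratio filter from the loop above can be isolated into a small helper that runs without OpenCV. This is only a sketch: `Match` and `ratioTest` are hypothetical names standing in for `cv::DMatch` and the inline loop, and the threshold is passed in (the listing uses 0.6). It only shows the filtering step with the size check done before indexing; it does not by itself explain the bad matches.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch so the sketch compiles without OpenCV.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the best candidate of each query only when it is clearly closer than
// the second-best one. Entries with fewer than two candidates are skipped,
// so knn[i][1] is never read out of range.
std::vector<Match> ratioTest(const std::vector<std::vector<Match> >& knn, float ratio)
{
    std::vector<Match> good;
    for (std::size_t i = 0; i < knn.size(); ++i) {
        if (knn[i].size() < 2)
            continue;
        if (knn[i][0].distance < ratio * knn[i][1].distance)
            good.push_back(knn[i][0]);
    }
    return good;
}
```

Calling `ratioTest(knn, 0.6f)` on a list converted from the `knnMatch` output would play the role of `good_matches` in the listing.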

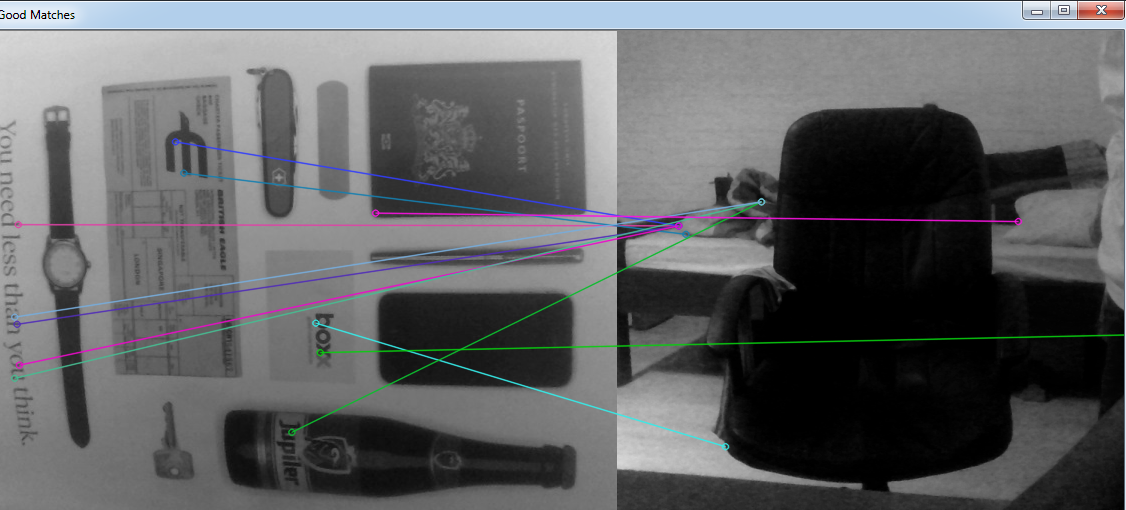

As the image shows, the code produces random matches to just about anything. I tried the same thing with the regular CPU functions, and it is reasonably accurate. The code for the CPU version is below:

#include "stdafx.h"
#include <stdio.h>

#include <iostream>

#include "opencv2/core/core.hpp"
#include "opencv2/nonfree/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/calib3d/calib3d.hpp"

using namespace cv;

int main()

{

Mat object = imread( "140614-194209.jpg", CV_LOAD_IMAGE_GRAYSCALE );

if( !object.data )

{

std::cout<< "Error reading object " << std::endl;

return -1;

}

//Detect the keypoints using the ORB detector (minHessian is passed as the maximum number of features)

int minHessian = 500;

OrbFeatureDetector detector( minHessian );

std::vector<KeyPoint> kp_object;

detector.detect( object, kp_object );

//Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat des_object;

extractor.compute( object, kp_object, des_object );

FlannBasedMatcher matcher;

VideoCapture cap(0);

namedWindow("Good Matches");

std::vector<Point2f> obj_corners(4);

//Get the corners from the object

obj_corners[0] = cvPoint(0,0);

obj_corners[1] = cvPoint( object.cols, 0 );

obj_corners[2] = cvPoint( object.cols, object.rows );

obj_corners[3] = cvPoint( 0, object.rows );

char key = 'a';

int framecount = 0;

while (key != 27)

{

Mat frame;

cap >> frame;

if (framecount < 5)

{

framecount++;

continue;

}

Mat des_image, img_matches;

std::vector<KeyPoint> kp_image;

std::vector<vector<DMatch > > matches;

std::vector<DMatch > good_matches;

std::vector<Point2f> obj;

std::vector<Point2f> scene;

std::vector<Point2f> scene_corners(4);

Mat H;

Mat image;

cvtColor(frame, image, CV_RGB2GRAY);

detector.detect( image, kp_image );

extractor.compute( image, kp_image, des_image );

matcher.knnMatch(des_object, des_image, matches, 2);

for(int i = 0; i < min(des_image.rows-1,(int) matches.size()); i++) //the size check below comes first so matches[i][1] is never read out of range

{

if((int) matches[i].size() == 2 && (matches[i][0].distance < 0.6*(matches[i][1].distance)))

{

good_matches.push_back(matches[i][0]);

}

}

//Draw only "good" matches

drawMatches( object, kp_object, image, kp_image, good_matches, img_matches, Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

if (good_matches.size() >= 4)

{

for( int i = 0; i < good_matches.size(); i++ )

{

//Get the keypoints from the good matches

obj.push_back( kp_object[ good_matches[i].queryIdx ].pt );

scene.push_back( kp_image[ good_matches[i].trainIdx ].pt );

}

H = findHomography( obj, scene, CV_RANSAC );

perspectiveTransform( obj_corners, scene_corners, H);

//Draw lines between the corners (the mapped object in the scene image )

line( img_matches, scene_corners[0] + Point2f( object.cols, 0), scene_corners[1] + Point2f( object.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( object.cols, 0), scene_corners[2] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( object.cols, 0), scene_corners[3] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( object.cols, 0), scene_corners[0] + Point2f( object.cols, 0), Scalar( 0, 255, 0), 4 );

}

//Show detected matches

imshow( "Good Matches", img_matches );

key = waitKey(1);

}

return 0;

}
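To make the corner-drawing step in the listings easier to follow: `perspectiveTransform` maps each object corner through the 3x3 homography and divides by the third homogeneous coordinate. The sketch below reproduces that arithmetic without OpenCV. `Point2` and `applyHomography` are hypothetical names, and the row-major `H[9]` layout is an assumption of this sketch, not how `cv::Mat` is accessed.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Point2 {
    double x;
    double y;
};

// Map each point through a 3x3 homography stored row-major in H:
// (x, y) -> (X / W, Y / W). No guard against W == 0 (a point mapped to
// infinity); a caller would need to handle that case.
std::vector<Point2> applyHomography(const std::vector<Point2>& pts, const double H[9])
{
    std::vector<Point2> out;
    out.reserve(pts.size());
    for (std::size_t i = 0; i < pts.size(); ++i) {
        const double x = pts[i].x;
        const double y = pts[i].y;
        const double X = H[0] * x + H[1] * y + H[2];
        const double Y = H[3] * x + H[4] * y + H[5];
        const double W = H[6] * x + H[7] * y + H[8];
        Point2 p = { X / W, Y / W };
        out.push_back(p);
    }
    return out;
}
```

With a poor set of matches the estimated homography is close to arbitrary, so the projected quadrilateral drawn by the four `line` calls can land anywhere in the frame.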

Any help would be greatly appreciated.

Solution

The problem has not been solved yet.

Other solutions